It seems like everyone is using AI these days. From chatbots to code generators, the wave of tools promising to make developers’ lives easier keeps growing. At Flowing Code, we wanted to see how AI could actually help in our day-to-day work, not just in theory, but in practice. After looking into what could be improved in our daily tasks, we decided to dive into the AI world with CodeRabbit, a tool designed to help review pull requests.

For our first try, we added the tool to our GitHub organization so we could use it in the review process for our add-ons. We figured the team could use an extra set of eyes, providing a first review on every new pull request. Let’s find out if it was the right call.

First Impressions

At the beginning of our interactions with the tool, it was hard to see how it was going to save us time or improve our reviews. Many of the early comments weren’t actually helpful: summaries sometimes missed the point, reviews flagged issues unrelated to the changes, and the tool claimed that methods or variables were missing when they weren’t. Several times we found ourselves spending more time pushing back, clarifying, or proving the AI wrong. We can’t deny that some of us even wondered whether it was worth the trouble.

But over time, as we tuned it to our needs and developed a clearer sense of how to use it and how to interpret its feedback, the experience began to shift.

Learning Each Other’s Language

From that point on, the interaction felt different. It was not just that we had managed to tune it; it also felt like the tool itself was “learning” what we needed. With each review, the feedback became more meaningful and targeted. The real breakthrough was noticing how CodeRabbit could reliably take care of the repetitive checks (style, consistency, small details) so we could focus on the things that truly require human judgment: design choices, architecture, and deeper logic.

Why We Think the Rabbit is Useful

Several features have made CodeRabbit genuinely valuable for us:

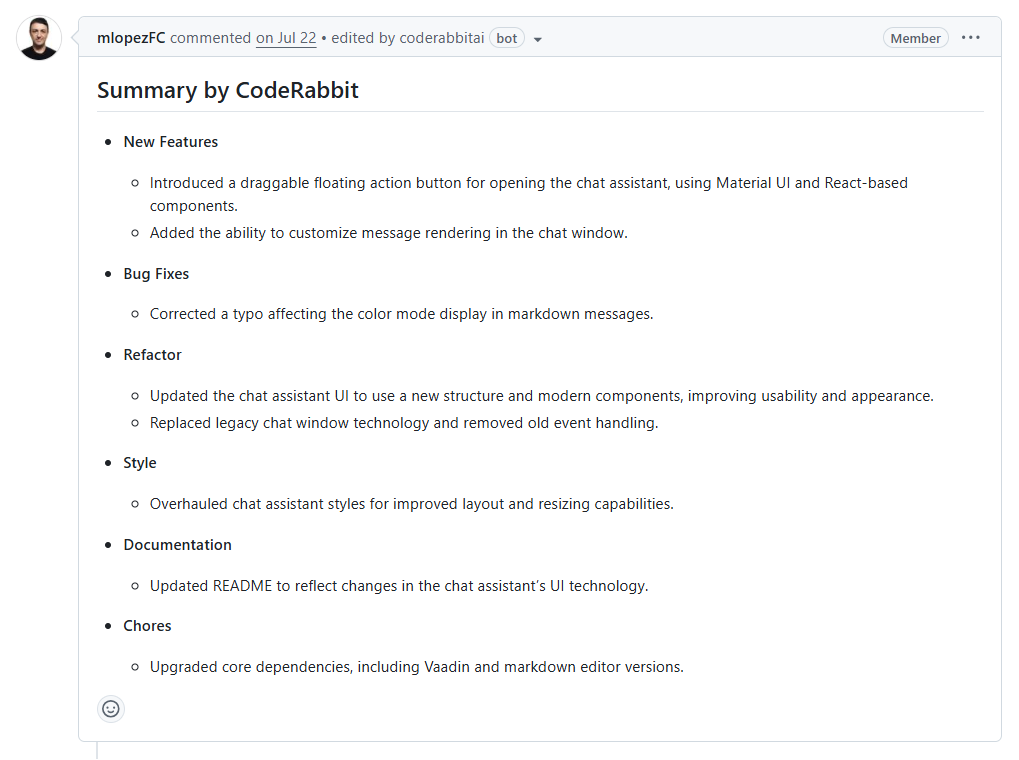

- PR summarization: Each pull request comes with a clear summary of its content. This makes it much faster to understand what’s being changed before diving into the details. And let’s be honest, developers are usually better at writing code than writing text. Having the AI generate a solid summary saves time and spares us from vague “small fixes” descriptions.

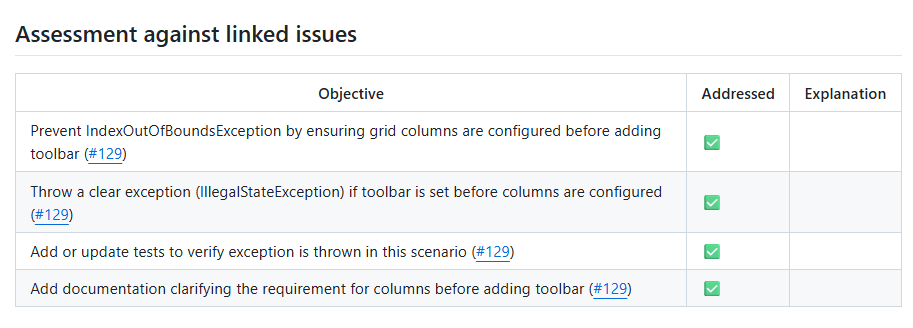

- Validation against issues: CodeRabbit helps check whether the commits in a PR align with the GitHub issue it’s supposed to solve. That saves us from “fixes issue X” PRs that only solve part of the problem.

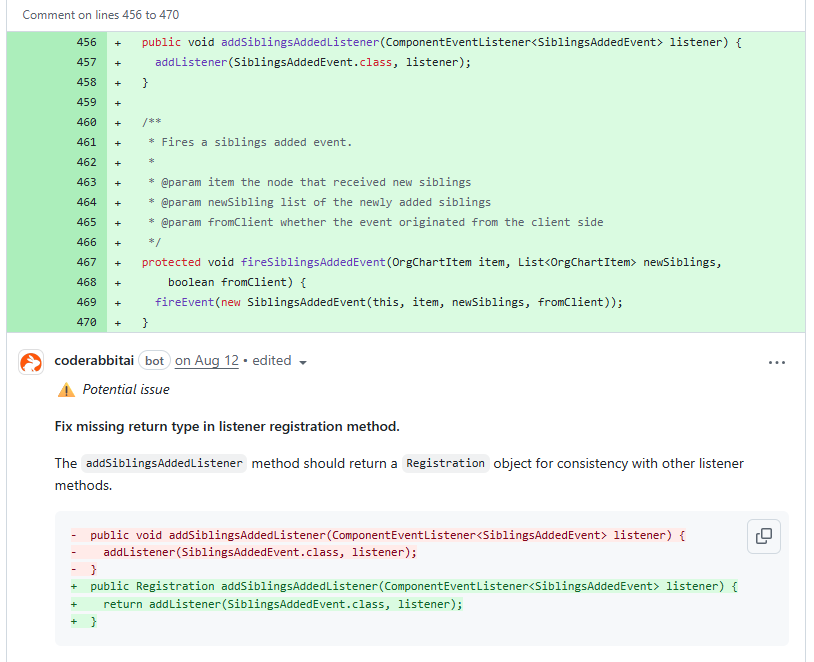

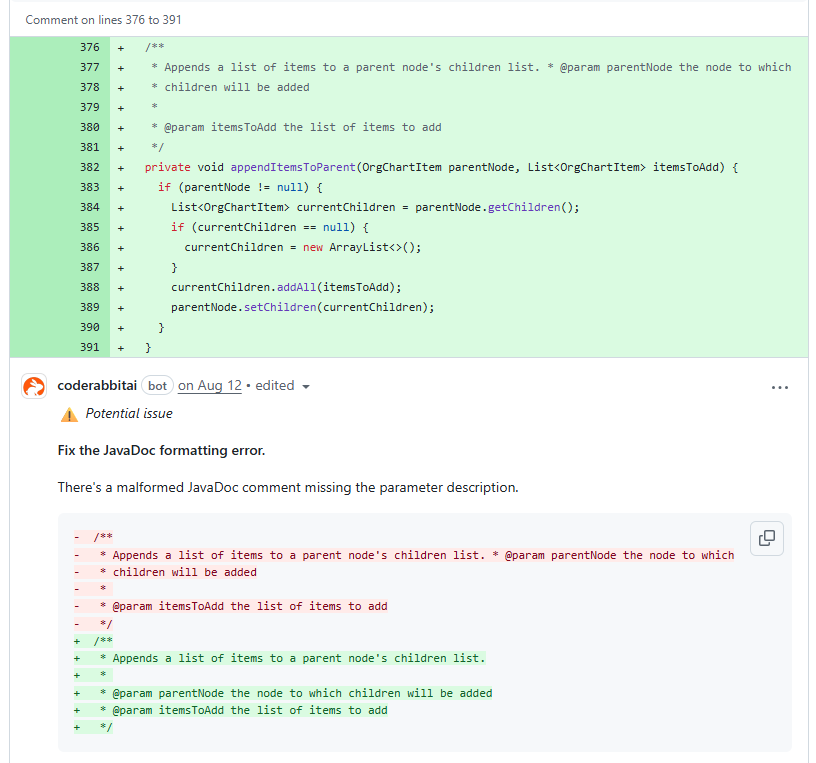

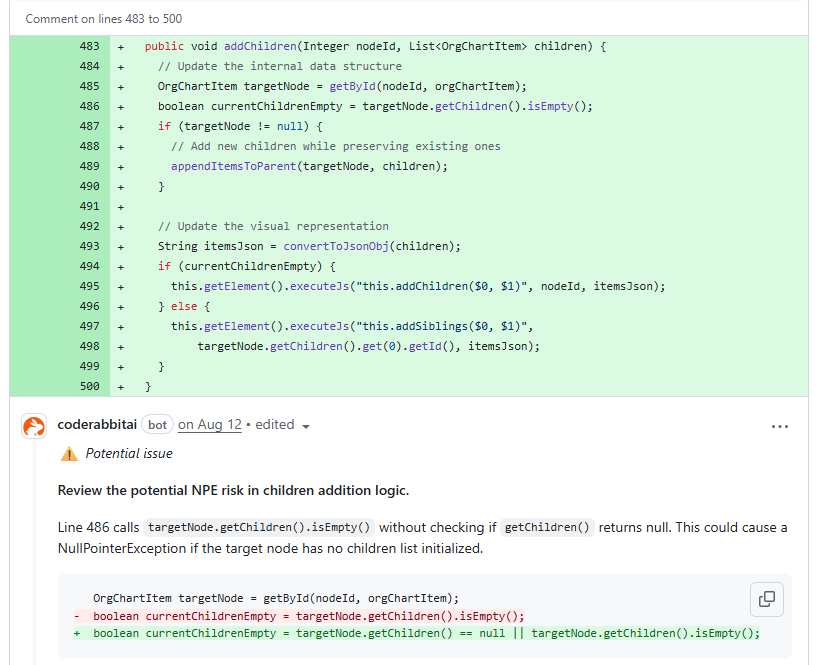

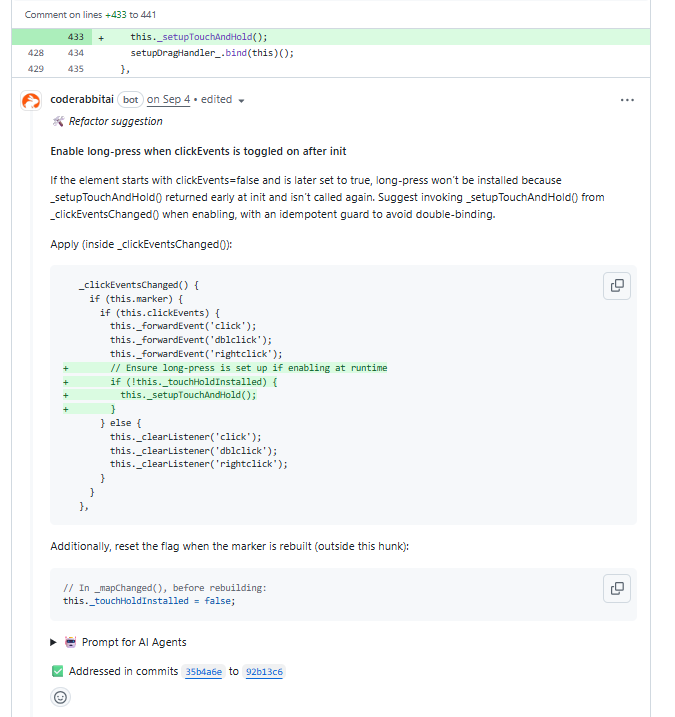

- Catching subtle bugs and style drift: It has flagged null-handling issues, style inconsistencies, and other small details that might otherwise have slipped through.

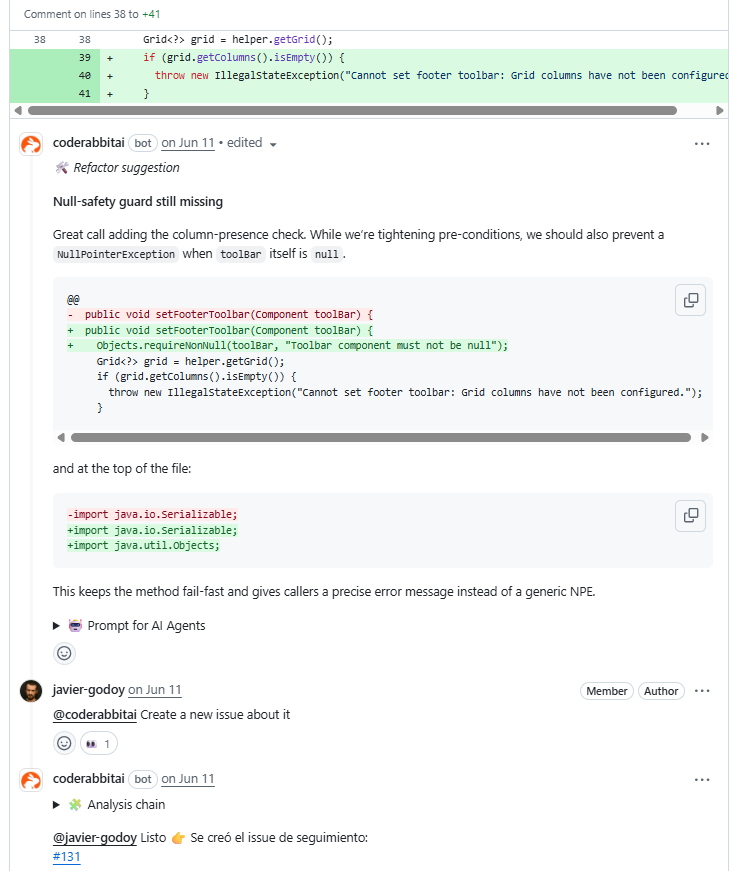

- Issue creation assistance: If a review comment points to something you won’t fix in the current PR, you can ask the tool to create a new issue for it.

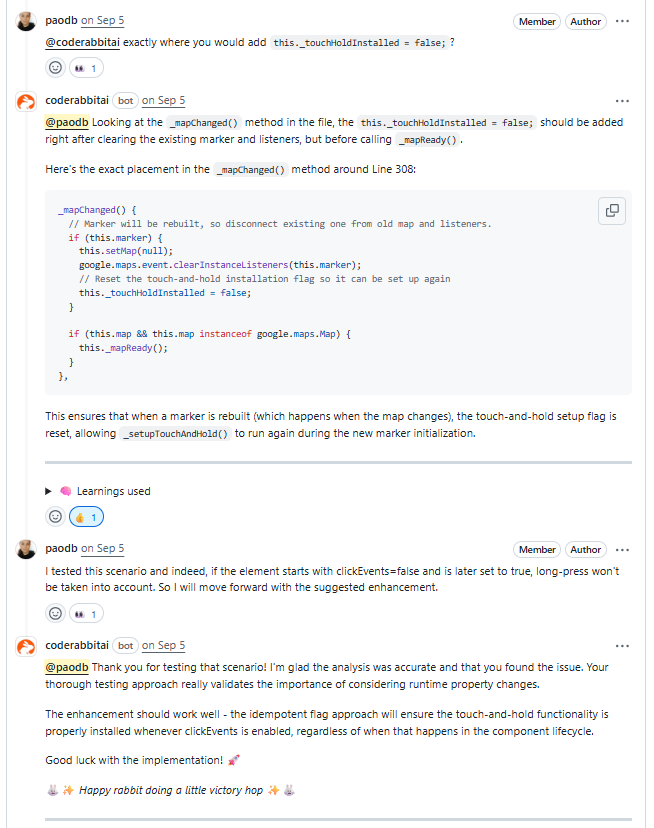

- Interactive conversations: You can ask follow-up questions about a specific comment or suggestion to understand the reasoning behind it, and it actually keeps track of the context. This makes reviews feel more like a dialogue than a list of one-off remarks.

- Multi-language support: It adapts to whatever technology you’re working with. In our case, that covers Java and Vaadin as well as React, TypeScript, Lit, Polymer, JavaScript, CSS, and HTML. It’s great having a single tool that can keep up with all of them.

The Impact on Our Team

CodeRabbit has changed the way we approach reviews. Reviewers no longer spend energy repeating the same comments over and over. Instead, we can have deeper conversations about architecture and usability. Reviews have become faster, more consistent, and, if we may say so, more enjoyable.

It has also made us more aware of the kind of details we tend to overlook. After seeing CodeRabbit flag certain issues repeatedly, developers started catching those themselves before even opening a PR. In a way, the tool trained us too, nudging us toward cleaner commits and better-prepared pull requests.

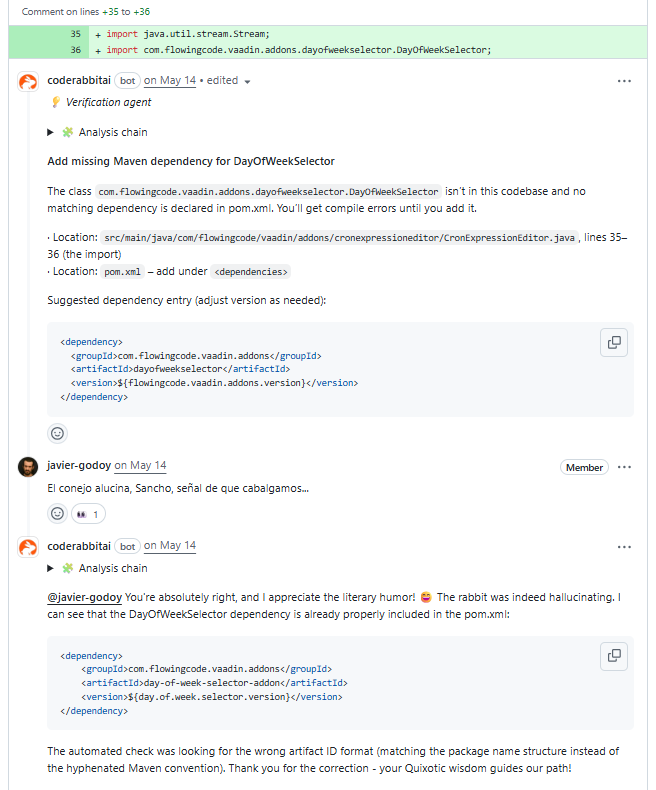

And of course, sometimes the AI argues harder than a human reviewer, which has given us more than one laugh along the way. Take the time it insisted one of our own dependencies was missing: after we corrected it, it responded with “The rabbit was indeed hallucinating… your Quixotic wisdom guides our path!” 🐇🤭 Moments like that reminded us that even AI reviewers can have a sense of humor, and a learning curve.

Fits Our Open Source Workflow

One of the things we appreciate most is that CodeRabbit’s free plan is enough for our open source work. As mentioned earlier, we use it across our add-ons on GitHub, and it covers what we need: unlimited repositories, PR summarization, and review assistance. For many teams working on open source, this makes it a very accessible way to start bringing AI into the workflow.

We know the tool offers more advanced features, and we might benefit from them in the future, but for now the free plan covers our needs. If you’re curious about what else it provides, you can check out their pricing page.

Conclusion

What started as an experiment has turned into something we’re glad to have in our toolkit. CodeRabbit isn’t magic, but it has become a valuable part of our workflow. It helps us keep quality high across our add-ons while making code reviews more focused and enjoyable.

If you’re thinking of trying a similar tool, our advice would be: give it time. It won’t be perfect from day one, and you’ll probably need to adjust rules and expectations along the way. But once it finds its rhythm with your team, it becomes a true partner.

AI in software development is evolving fast, and we’re excited to see where it goes next. For now, we’re happy to have a bunny colleague who never gets tired of pointing out what we could do better.

Before wrapping up, we’re curious to know: have you tried CodeRabbit or another AI review tool? We’d love to hear how it worked for you. If there are other AI tools you’d recommend, share them in the comments!

Thanks for reading, and let’s keep the code flowing!
